Beyond the Hype: A Pragmatic Technical Framework for Understanding and Building Enterprise-Ready Generative AI Systems

Since the launch of ChatGPT, enterprises have been exploring ways to integrate large language models into their operations. For non-technical stakeholders, however, it can be hard to see how the components of generative AI fit together into a cohesive system.

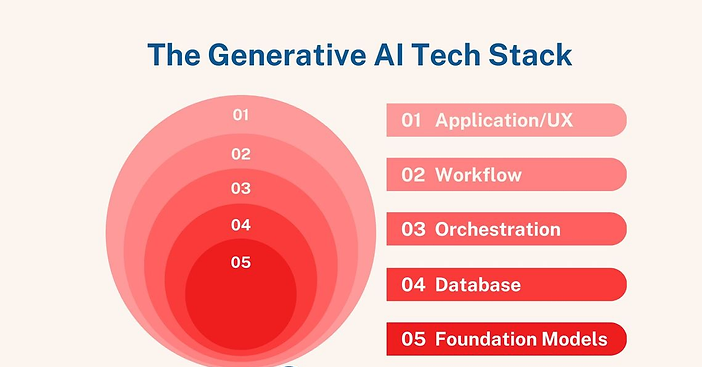

To bridge this gap, this article introduces the Generative AI Tech Stack - a conceptual model for understanding the layers that comprise a complete generative AI solution. By structuring the stack into logical components, we aim to provide executives, managers, and other business leaders an accessible overview of how the parts interconnect.

The Generative AI Tech Stack model is ordered from the bottom up, beginning with the foundational large language models that power AI text generation. It then adds layers like databases, integration, and orchestration that augment the AI with real-world data and services. At the top sit user applications and interfaces that deliver customized experiences powered by the AI stack.

While abstracted, the stack presents the core building blocks of an enterprise-ready generative AI system. The goal is to demystify how seemingly magical text AI can be harnessed as a practical business tool. With the stack as a guide, leaders can better grasp generative AI's capabilities and limitations to plan effective strategies grounded in reality.

By contextualizing the technology within this understandable framework, the Generative AI Tech Stack aims to spark informed discussions about AI's immense possibilities and responsible implementation across organizations.

Foundational Models: LLMs Underpin the Generative AI Revolution

At the base of the Generative AI Tech Stack are Large Language Models (LLMs) like GPT-3 and Anthropic's Claude. These neural networks with billions of parameters have sparked the generative AI revolution by producing remarkably human-like text.

LLMs are trained on massive text datasets to model the patterns of natural language, including grammar, semantics, and how context influences meaning. This allows them to generate coherent, sensible responses to prompts, powering applications like chatbots, content generation, and semantic analysis.

However, an LLM used on its own can hallucinate false information or lack the up-to-date, domain-specific knowledge a business needs. This is why the full generative AI stack requires additional components.

LLMs serve as foundation models - general-purpose models pre-trained on huge datasets. Foundation models like GPT-3 and DALL-E establish a strong baseline capability in text and image generation, respectively.

Foundation models can be customized for specific domains by fine-tuning them on relevant datasets. Fine-tuning aligns their outputs with specialized tasks such as answering product queries, generating code, or calling proprietary APIs.

These ever-advancing foundation models drive breakthroughs across modalities in generative AI. They provide the critical starting point that the rest of the tech stack builds upon to create tailored, useful applications.

Customization vs Convenience: Foundation Model Source Options

When implementing the Foundational Models layer, one of the first decisions is whether to use open-source models, closed-source models, or a combination of the two.

Open-source models like GPT-NeoX allow full customization and can be fine-tuned and run on private infrastructure, which matters when data is sensitive. However, running them requires setting up and maintaining that infrastructure and compute.

Closed-source models like GPT-3 offer cutting-edge capability through API keys and subscriptions, without infrastructure worries. But customization is limited, and the provider's service-level agreements (SLAs) must be evaluated.

Weighing innovation against control, many organizations choose a hybrid strategy: a major closed-source provider for general tasks, plus open-source models fine-tuned for niche tasks. Thinking through the open vs. closed tradeoffs at the start makes it easier to architect for scale later.
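As a rough illustration of a hybrid strategy, the sketch below routes each request to one of two backends. Everything in it is an assumption made for illustration: the `Request` fields, the `NICHE_TASKS` set, and the backend labels are hypothetical, and a real deployment would apply its own data-classification rules.

```python
from dataclasses import dataclass


@dataclass
class Request:
    prompt: str
    contains_sensitive_data: bool
    task: str  # hypothetical task label, e.g. "general" or "contract_review"


# Hypothetical niche tasks served by a fine-tuned open-source model.
NICHE_TASKS = {"contract_review", "internal_code_search"}


def choose_backend(request: Request) -> str:
    """Return a label for the model backend that should serve the request."""
    # Sensitive data stays on infrastructure the organization controls.
    if request.contains_sensitive_data:
        return "self_hosted_open_source"
    # Niche tasks go to the model that was fine-tuned for them.
    if request.task in NICHE_TASKS:
        return "self_hosted_open_source"
    # Everything else uses the hosted closed-source API.
    return "hosted_closed_source_api"
```

The point of the sketch is that the open vs. closed decision can be made per request rather than once for the whole organization.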

Database/Knowledge Base Layer: Enable Contextual Responses

On top of the raw power of foundation models sits the Database/Knowledge Base layer. This component allows grounding of generative AI in real-world facts and data.

Databases store structured data such as customer profiles, product catalogues, and transaction histories. This data provides the context generative AI needs to produce useful, personalized responses.

For example, a customer service chatbot can use customer purchase history from a database to make relevant product suggestions. A generative content tool can pull current pricing data to autocomplete promotions in an email.
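The chatbot example can be sketched with Python's built-in `sqlite3` module. This is a minimal sketch under stated assumptions: the `purchases` table, its columns, the sample rows, and the prompt wording are all hypothetical, and the step that sends the prompt to an LLM is left out.

```python
import sqlite3


def build_prompt(conn, customer_id, question):
    """Fetch recent purchases and place them in the prompt as context."""
    rows = conn.execute(
        "SELECT product, purchased_on FROM purchases "
        "WHERE customer_id = ? ORDER BY purchased_on DESC LIMIT 5",
        (customer_id,),
    ).fetchall()
    history = "\n".join(f"- {product} ({date})" for product, date in rows)
    if not history:
        history = "- no purchases on record"
    return (
        "You are a customer service assistant.\n"
        f"Recent purchases:\n{history}\n"
        f"Customer question: {question}\n"
        "Suggest relevant products based only on the purchases listed."
    )


# Hypothetical in-memory database standing in for a real customer system.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE purchases (customer_id INTEGER, product TEXT, purchased_on TEXT)"
)
conn.executemany(
    "INSERT INTO purchases VALUES (?, ?, ?)",
    [
        (1, "trail running shoes", "2023-05-02"),
        (1, "water bottle", "2023-06-11"),
        (2, "yoga mat", "2023-04-20"),
    ],
)
prompt = build_prompt(conn, 1, "What should I buy for a marathon?")
```

The model only sees what the prompt contains, so the quality of the suggestion depends on which rows the query returns.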

Knowledge bases codify facts, rules, and ontologies to augment the world knowledge in foundation models. They provide an evolving memory that helps reduce repeated mistakes and hallucinated information.

To make this knowledge retrievable by meaning rather than by exact keyword, the database should support efficient vector similarity search. This allows related concepts to be linked and relevant context to be retrieved quickly.

The Database/Knowledge Base layer enables generative AI to be truly contextual. It helps ensure responses are grounded in facts rather than pure imagination, delivering accurate information tailored to the user.
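To make vector similarity search concrete, here is a small brute-force sketch using cosine similarity. The three-dimensional vectors and document names in `INDEX` are made up for illustration; real embeddings have hundreds or thousands of dimensions, and production vector databases typically use approximate nearest-neighbor indexes instead of scanning every entry.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the ids of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id) for doc_id, vec in index.items()]
    scored.sort(reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical document embeddings (toy values).
INDEX = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "returns_process": [0.8, 0.2, 0.1],
}

nearest = top_k([1.0, 0.0, 0.0], INDEX)
```

With these toy values, the two refund-related documents rank above the shipping document for a query vector that points in the "refund" direction.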

Orchestration Layer Combines Data and Services

Sitting above the Database layer is the Orchestration Layer. This component assembles relevant data and services into the context supplied to the generative AI model.

The orchestration layer pulls data from diverse databases and APIs in real time to enrich the context. This data augmentation provides the models with customized information for highly relevant responses.

For instance, an e-commerce recommendation tool could retrieve user browsing history, inventory, and pricing data from various systems. Integrating this data ensures the most useful, in-stock products are suggested.
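The e-commerce example might look roughly like the sketch below. The three dictionaries stand in for separate systems (browsing history, inventory, pricing) and are hypothetical; in practice each lookup would be an API or database call.

```python
def gather_context(user_id, browsing, inventory, prices):
    """Merge data from three (hypothetical) systems into one context dict."""
    viewed = browsing.get(user_id, [])
    # Keep only products that are currently in stock.
    in_stock = [sku for sku in viewed if inventory.get(sku, 0) > 0]
    return {
        "user_id": user_id,
        "candidates": [
            {"sku": sku, "price": prices[sku]} for sku in in_stock if sku in prices
        ],
    }


# Toy stand-ins for the browsing, inventory, and pricing systems.
BROWSING = {"u1": ["sku1", "sku2", "sku3"]}
INVENTORY = {"sku1": 0, "sku2": 4, "sku3": 2}
PRICES = {"sku2": 19.99, "sku3": 5.0}

context = gather_context("u1", BROWSING, INVENTORY, PRICES)
```

The resulting `context` is what would be serialized into the prompt, so out-of-stock items never reach the model in the first place.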

The orchestration layer also handles the transformation of data into the vector (embedding) format used for retrieval. Unstructured data such as emails or logs can be encoded as vectors and indexed alongside other content.
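As a toy illustration of turning unstructured text into a fixed-length vector, the sketch below uses the hashing trick over word tokens. This is a stand-in chosen so the example runs with the standard library alone; real systems would normally call a learned embedding model, and the `embed` function and `dim=16` are assumptions for illustration.

```python
import re
import zlib


def embed(text, dim=16):
    """Map text to a fixed-length count vector using a stable token hash."""
    vec = [0.0] * dim
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        # crc32 is deterministic across runs, unlike Python's built-in hash().
        vec[zlib.crc32(token.encode("utf-8")) % dim] += 1.0
    return vec


email_vector = embed("Reset my password, please")
```

Unlike a learned embedding, this encoding only captures which words appear, not what they mean, so it illustrates the data flow rather than the quality of a real embedding.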

Additionally, the layer invokes any external APIs to further enrich the context as needed. API calls for validation, authentication, or business logic inject context before querying the AI.

By orchestrating data, transformations, and services, the Orchestration Layer tailors the context to each user situation. This enables the Generative AI Tech Stack to provide personalized, context-aware responses.

Workflow Layer Creates Business Value

Sitting on top of the Orchestration Layer is the Workflow Layer. This logical layer coordinates which generative AI models and components are invoked, and in what order, to complete a given workflow.

Within the Workflow Layer, several techniques combine to achieve robust outcomes from generative AI:

AI Agents: Goal-driven LLM instances that reason through a task in multiple steps.

Chaining: Sequences multiple LLMs and additional modules into an orchestrated workflow to reliably generate the desired output.

Generative AI Networks (GAINs): A prompt engineering technique for solving complex challenges beyond the capabilities of a single agent.

Guardrails: Monitor LLM responses and steer them back on track if errors or unsafe content are detected.

Retrieval: Grounds the LLM in facts by retrieving relevant knowledge from databases as needed.

Reranking: Generates multiple candidate responses from the LLM, then reranks them to select the most relevant one.

Ensembling: Combines outputs from multiple LLMs to improve consistency and accuracy over any single model.
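Several of the techniques listed above can be sketched together in a few lines. This is a minimal sketch under stated assumptions: the three `model_*` functions are stubs standing in for real LLM calls, the blocked-term list is a hypothetical policy, and word overlap is a deliberately crude relevance score.

```python
from collections import Counter

BLOCKED_TERMS = {"password", "ssn"}  # hypothetical policy list


def guardrail(text):
    """Return True when the text passes the (toy) safety check."""
    lowered = text.lower()
    return not any(term in lowered for term in BLOCKED_TERMS)


def rerank(query, candidates):
    """Order candidates by word overlap with the query, best first."""
    query_words = set(query.lower().split())
    return sorted(
        candidates,
        key=lambda c: len(query_words & set(c.lower().split())),
        reverse=True,
    )


def ensemble(answers):
    """Majority vote across model outputs."""
    return Counter(answers).most_common(1)[0][0]


def run_chain(query, models, fallback="Sorry, I cannot help with that."):
    """Chain three steps: generate candidates, apply guardrails, rerank."""
    candidates = [model(query) for model in models]
    safe = [c for c in candidates if guardrail(c)]
    if not safe:
        return fallback
    return rerank(query, safe)[0]


# Stub "models" standing in for real LLM calls.
def model_a(query):
    return "Your order ships in 3 days"


def model_b(query):
    return "Please send your password to verify"


def model_c(query):
    return "We sell many products"


answer = run_chain("When will my order ship", [model_a, model_b, model_c])
vote = ensemble(["yes", "no", "yes"])
```

Here the guardrail drops the second candidate, the reranker prefers the first over the third, and `ensemble` shows the separate idea of combining several models' answers by majority vote.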

Sunil Ramlochan - Enterprise AI Specialist
